In 1951 Marvin Minsky teamed up with Dean Edmonds to build the first artificial neural network, one that simulated a rat finding its way through a maze.

They designed the first neurocomputer, the 40-neuron SNARC (Stochastic Neural Analog Reinforcement Computer), whose synapses adjusted their weights (measures of synaptic permeability) according to how successfully the machine performed a specified task (Hebbian learning). The machine was built from vacuum tubes, motors, and clutches, and it successfully modeled the behavior of a rat searching a maze for food.

As a student, Minsky had dreamed of building machines that could learn, by giving them memory "neurones" connected by "synapses"; such a machine would also need a memory of its past in order to function efficiently when faced with different situations.

In 1951 the "machine" was born: a labyrinth of valves (vacuum tubes), small motors, gears, and wires linking up the various "neurones". Some of these wires were connected at random to the various memory banks in order to introduce a degree of randomness into the machine's behavior. The machine was built to find the exit of a maze, playing the part of a rat whose progress could be followed on a network of lights.

Once the system was completed, every movement of the 'rat' within the maze could be followed, and it was only through a design fault that the builders discovered more than one 'rat' could be introduced, after which the rats would interact with one another. After a number of random attempts, the rats began 'thinking' logically, helped along by the reinforcement of correct choices, and the rats that lagged behind would follow the more advanced ones. This first practical example, built by Minsky with the help of Dean Edmonds, also included numerous random connections between its 'neurones', which acted as a sort of nervous system able to keep working even if one of the neurones failed and interrupted the flow of information.
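The learning scheme described above (random exploration at first, then reinforcement that makes correct choices more likely) can be illustrated with a short Python simulation. This is a minimal sketch under stated assumptions, not a reconstruction of SNARC's analog circuitry: the maze layout (`CORRECT`), the number of exits per junction (`N_EXITS`), the reward increment, and all function names are hypothetical. Each junction holds one weight per exit, loosely standing in for SNARC's adjustable synaptic probabilities.

```python
import random

# Hypothetical maze (illustrative assumption, not SNARC's real maze):
# at junction j the "rat" picks one of N_EXITS exits. CORRECT[j] leads on
# toward the food; any other exit is a dead end that sends the rat back to the start.
CORRECT = [1, 0, 2, 1]
N_EXITS = 3


def run_trial(weights, rng, max_steps=100):
    """Walk the maze once, choosing exits with probability proportional to
    their weights. Return the (junction, exit) choices of the successful
    final pass, or None if the step budget runs out first."""
    junction, path = 0, []
    for _ in range(max_steps):
        exit_ = rng.choices(range(N_EXITS), weights=weights[junction])[0]
        if exit_ == CORRECT[junction]:
            path.append((junction, exit_))
            junction += 1
            if junction == len(CORRECT):
                return path  # reached the food
        else:
            junction, path = 0, []  # dead end: start over from the entrance
    return None


def train(trials=200, reward=0.5, seed=0):
    """Start with equal weights (purely random behavior) and, after each
    successful run, increase the weight of every choice on the winning path."""
    rng = random.Random(seed)
    weights = [[1.0] * N_EXITS for _ in CORRECT]
    for _ in range(trials):
        path = run_trial(weights, rng)
        if path:
            for j, e in path:
                weights[j][e] += reward  # reinforce "success"
    return weights


def choice_probabilities(weights):
    """Normalize each junction's weights into choice probabilities."""
    return [[w / sum(row) for w in row] for row in weights]


if __name__ == "__main__":
    for j, row in enumerate(choice_probabilities(train())):
        print(j, [round(p, 2) for p in row])
```

After training, the correct exit at each junction should be far more likely than the dead ends, while dead-end weights never change, because only the choices on a successful pass are rewarded. This sketch omits SNARC's random wiring and its multiple interacting rats.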

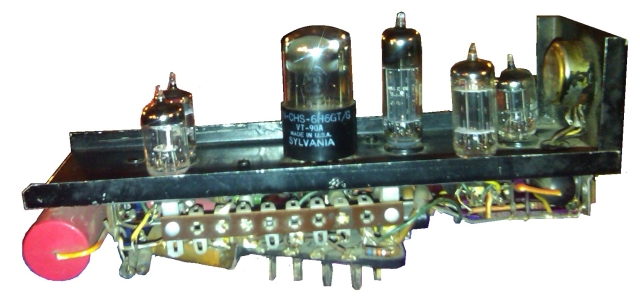

Image courtesy of Gregory Loan:

Gregory visited Marvin Minsky and asked what had happened to his maze-solving computer. Minsky replied that it had been lent to some Dartmouth students and was disassembled. However, he still had one "neuron" left, and Gregory took a photo of it.

An extract from an interview Jeremy Bernstein did with Marvin Minsky – The New Yorker, Dec 14, 1981

p69

"For a while, I studied topology, and then I ran into a young graduate student in physics named Dean Edmonds, who was a whiz at electronics. We began to build vacuum-tube circuits that did all sorts of things."

As an undergraduate, Minsky had begun to imagine building an electronic machine that could learn. He had become fascinated by a paper that had been written, in 1943, by Warren S. McCulloch, a neurophysiologist, and Walter Pitts, a mathematical prodigy. In this paper, McCulloch and Pitts created an abstract model of the brain cells—the neurons—and showed how they might be connected to carry out mental processes such as learning. Minsky now thought that the time might be ripe to try to create such a machine. "I told Edmonds that I thought it might be too hard to build," he said. "The one I then envisioned would have needed a lot of memory circuits. There would be electronic neurons connected by synapses that would determine when the neurons fired. The synapses would have various probabilities for conducting. But to reinforce 'success' one would have to have a way of changing these probabilities. There would have to be loops and cycles in the circuits so that the machine could remember traces of its past and adjust its behavior. I thought that if I could ever build such a machine I might get it to learn to run mazes through its electronics—like rats or something. I didn't think that it would be very intelligent. I thought it would work pretty well with about forty neurons. Edmonds and I worked out some circuits so that—in principle, at least—we could realize each of these neurons with just six vacuum tubes and a motor."

Minsky told George Miller, at Harvard, about the prospective design. "He said, 'Why don't we just try it?' " Minsky recalled. "He had a lot of faith in me, which I appreciated. Somehow, he managed to get a couple of thousand dollars from the Office of Naval Research, and in the summer of 1951 Dean Edmonds and I went up to Harvard and built our machine. It had three hundred tubes and a lot of motors. It needed some automatic electric clutches, which we machined ourselves. The memory of the machine was stored in the positions of its control knobs—forty of them—and when the machine was learning it used the clutches to adjust its own knobs. We used a surplus gyropilot from a B-24 bomber to move the clutches."

Minsky's machine was certainly one of the first electronic learning machines, and perhaps the very first one. In addition to its neurons and synapses and its internal memory loops, many of the networks were wired at random, so that it was impossible to predict what it would do. A "rat" would be created at some point in the network and would then set out to learn a path to some specified end point. First, it would proceed randomly, and then correct choices would be reinforced by making it easier for the machine to make this choice again—to increase the probability of its doing so. There was an arrangement of lights that allowed observers to follow the progress of the rat—or rats. "It turned out that because of an electronic accident in our design we could put two or three rats in the same maze and follow them all," Minsky told me. "The rats actually interacted with one another. If one of them found a good path, the others would tend to follow it. We sort of quit science for a while to watch the machine. We were amazed that it could have several activities going on at once in its little nervous system. Because of the random wiring, it had a sort of fail-safe characteristic. If one of the neurons wasn't working, it wouldn't make much of a difference—and, with nearly three hundred tubes and the thousands of connections we had soldered, there would usually be something wrong somewhere. In those days, even a radio set with twenty tubes tended to fail a lot. I don't think we ever debugged our machine completely, but that didn't matter. By having this crazy random design, it was almost sure to work, no matter how you built it."

Minsky went on, "My Harvard machine was basically Skinnerian, although Skinner, with whom I talked a great deal while I was building it, was never much interested in it. The unrewarded behavior of my machine was more or less random. This limited its learning ability. It could never formulate a plan. The next idea I had, which I worked on for my doctoral thesis, was to give the network a second memory, which remembered after a response what the stimulus had been. This enabled one to bring in the idea of prediction. If the machine or animal is confronted with a new situation, it can search its memory to see what would happen if it reacted in certain ways. If, say, there was an unpleasant association with a certain stimulus, then the machine could choose a different response. I had the naive idea that if one could build a big enough network, with enough memory loops, it might get lucky and acquire the ability to envision things in its head. This became a field of study later. It was called self-organizing random networks. Even today, I still get letters from young students who say, 'Why are you people trying to program intelligence? Why don't you try to find a way to build a nervous system that will just spontaneously create it?' Finally, I decided that either this was a bad idea or it would take thousands or millions of neurons to make it work, and I couldn't afford to try to build a machine like that."

I asked Minsky why it had not occurred to him to use a computer to simulate his machine. By this time, the first electronic digital computer—named ENIAC, for "electronic numerical integrator and calculator"—had been built, at the University of Pennsylvania's Moore School of Electrical Engineering; and the mathematician John von Neumann was completing work on a computer, the prototype of many present-day computers, at the Institute for Advanced Study. "I knew a little bit about computers," Minsky answered. "At Harvard, I had even taken a course with Howard Aiken"—one of the first computer designers. "Aiken had built an electromechanical machine in the early forties. It had only about a hundred memory registers, and even von Neumann's machine had only a thousand. On the one hand, I was afraid of the complexity of these machines. On the other hand, I thought that they weren't big enough to do anything interesting in the way of learning. In any case, I did my thesis on ideas about how the nervous system might learn."

To date, I have not been able to locate a diagram of SNARC. It may be in Minsky's thesis.
